Intro

One of the most groundbreaking scientific theories of all time is quantum mechanics. It explains many surprising phenomena, such as quantum tunneling. In tunneling, a particle passes through an energy barrier that classical physics says it could not cross.

Quantum mechanics tells us that we cannot know both the position and the momentum of a particle with arbitrary precision at the same time. Even so, the theory enables us to build quantum computers. For certain problems, such a computer could produce an answer in seconds where an ordinary computer would need billions of years.

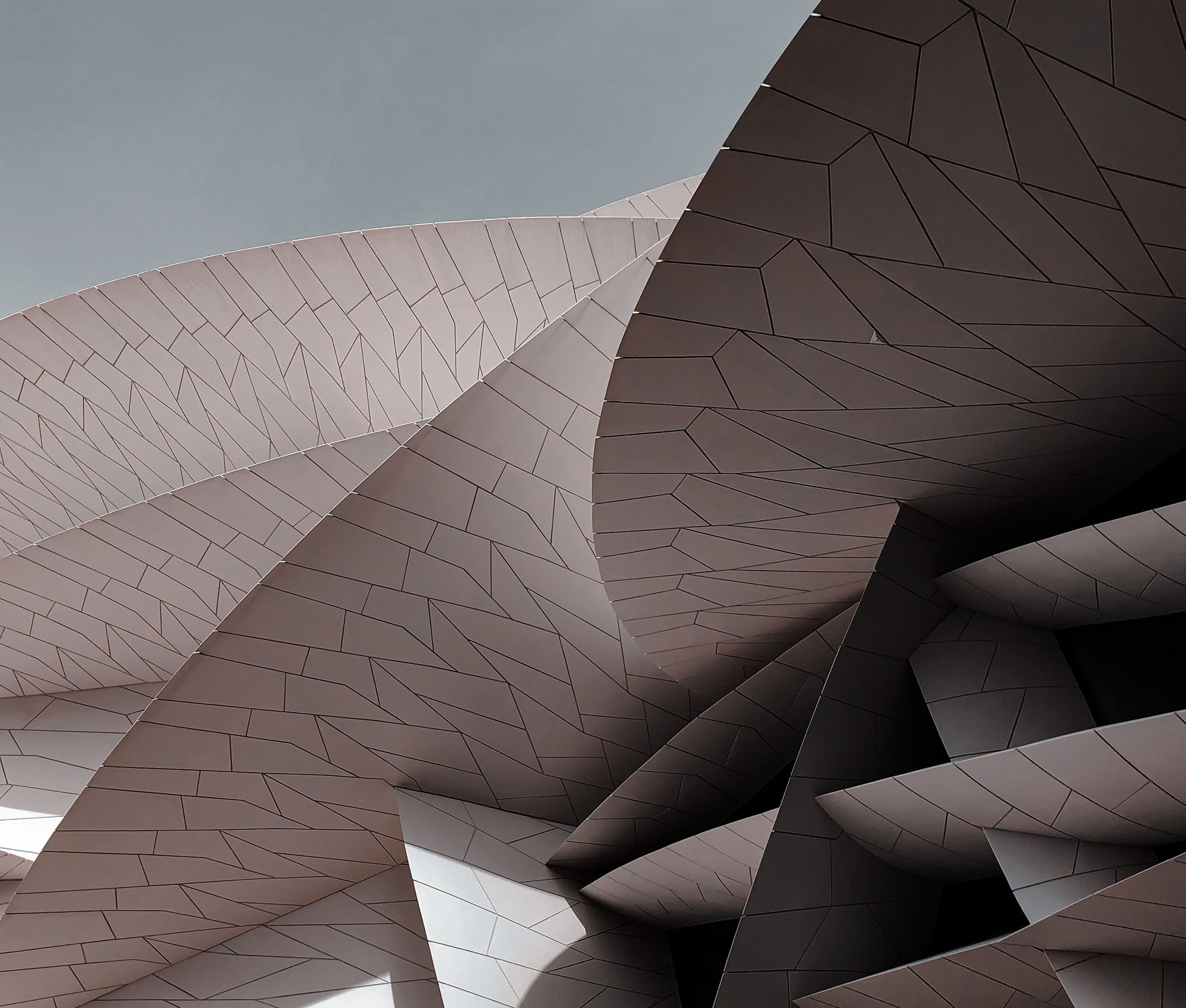

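In quantum physics we find that an electron bound to an atom does not follow a classical orbit around the nucleus. Instead it occupies an orbital, which is a region where the electron is likely to be found. These regions have very interesting shapes, like the one in image 1, and each such shape corresponds to a state of the electron.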

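The state of an electron can be described by a vector. Distinct energy states, such as two different orbitals, are orthogonal to each other. When each state vector is also normalized to length one, the states form an orthonormal set.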
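To make "states as vectors" concrete, here is a minimal Python sketch of a toy two-state system. This is an assumption for illustration only: real orbitals live in an infinite-dimensional space, while here each state is just a pair of complex amplitudes. The helper names `inner` and `norm` are chosen for this sketch. The inner product is zero for orthogonal states, and a normalized state has length one.

```python
import math


def inner(a, b):
    """Inner product <a|b> of two state vectors (first argument conjugated)."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def norm(a):
    """Length of a state vector; a normalized state has length 1."""
    return math.sqrt(abs(inner(a, a)))


# Two basis states of a toy two-level system (an assumed example).
ket0 = (1 + 0j, 0j)
ket1 = (0j, 1 + 0j)

# An equal superposition of the two basis states, scaled to length 1.
plus = tuple((x + y) / math.sqrt(2) for x, y in zip(ket0, ket1))

print(inner(ket0, ket1) == 0)         # True: the basis states are orthogonal
print(norm(ket0), norm(ket1))         # 1.0 1.0: both are normalized
print(math.isclose(norm(plus), 1.0))  # True: the superposition is normalized too
```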

Concept

Informally, we can describe a vector space as:

The continuous region within which all of its vectors exist and move

We can think of a vector as being able to move in two different ways without leaving the vector space:

By having another vector added to it

By being multiplied by any number (a scalar)

Finally, the fact that a vector can be moved by any amount corresponds to continuity.

Math

Formally, a vector space is a non-empty set of objects equipped with two operations, addition and scalar multiplication. These operations must obey ten rules known as the vector space axioms.

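Let $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ be in $V$, and let $k$ and $m$ be scalars. Then $V$ is a vector space if and only if:

1. $V$ is closed under addition; that is, if $\mathbf{u}$ and $\mathbf{v}$ are in $V$, then $\mathbf{u} + \mathbf{v}$ is in $V$.

2. $\mathbf{u} + \mathbf{v} = \mathbf{v} + \mathbf{u}$

3. $\mathbf{u} + (\mathbf{v} + \mathbf{w}) = (\mathbf{u} + \mathbf{v}) + \mathbf{w}$

4. $V$ contains an object $\mathbf{0}$ (called the zero vector) that behaves like an additive zero in the sense that $\mathbf{u} + \mathbf{0} = \mathbf{u}$ for every $\mathbf{u}$ in $V$.

5. For each object $\mathbf{u}$ in $V$, there is an object $-\mathbf{u}$ in $V$ (called the negative of $\mathbf{u}$) such that $\mathbf{u} + (-\mathbf{u}) = \mathbf{0}$.

6. $V$ is closed under scalar multiplication; that is, if $\mathbf{u}$ is in $V$ and $k$ is a scalar, then $k\mathbf{u}$ is in $V$.

7. $k(\mathbf{u} + \mathbf{v}) = k\mathbf{u} + k\mathbf{v}$

8. $(k + m)\mathbf{u} = k\mathbf{u} + m\mathbf{u}$

9. $k(m\mathbf{u}) = (km)\mathbf{u}$

10. $1\mathbf{u} = \mathbf{u}$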
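A computer cannot prove that the axioms hold, but it can spot-check them on sample vectors. The minimal Python sketch below does that for any pair of operations. `check_axioms` and its parameters are hypothetical names introduced here. The axioms are numbered in the standard order, from closure under addition (1) to $1\mathbf{u} = \mathbf{u}$ (10). The usage example checks $\mathbb{R}^2$ with the conventional operations.

```python
import itertools
import math


def close(a, b):
    """Compare two vectors (tuples of floats) up to rounding error."""
    return all(math.isclose(x, y, abs_tol=1e-9) for x, y in zip(a, b))


def check_axioms(add, smul, zero, neg, contains, vectors, scalars):
    """Return the numbers of the axioms (1-10) that fail on the samples.

    Passing is only evidence, not a proof: the axioms must hold for all
    vectors and scalars, not just the ones tested here.
    """
    failed = set()
    for u, v, w in itertools.product(vectors, repeat=3):
        if not contains(add(u, v)):
            failed.add(1)                       # closure under addition
        if not close(add(u, v), add(v, u)):
            failed.add(2)                       # commutativity
        if not close(add(u, add(v, w)), add(add(u, v), w)):
            failed.add(3)                       # associativity
    for u in vectors:
        if not close(add(u, zero), u):
            failed.add(4)                       # zero vector
        if not close(add(u, neg(u)), zero):
            failed.add(5)                       # negatives
        if not close(smul(1, u), u):
            failed.add(10)                      # 1u = u
        for k, m in itertools.product(scalars, repeat=2):
            if not contains(smul(k, u)):
                failed.add(6)                   # closure under scalar mult.
            if not close(smul(k + m, u), add(smul(k, u), smul(m, u))):
                failed.add(8)                   # (k + m)u = ku + mu
            if not close(smul(k, smul(m, u)), smul(k * m, u)):
                failed.add(9)                   # k(mu) = (km)u
    for u, v in itertools.product(vectors, repeat=2):
        for k in scalars:
            if not close(smul(k, add(u, v)), add(smul(k, u), smul(k, v))):
                failed.add(7)                   # k(u + v) = ku + kv
    return sorted(failed)


# Usage: R^2 with the conventional operations.
def add(u, v):
    return (u[0] + v[0], u[1] + v[1])


def smul(k, u):
    return (k * u[0], k * u[1])


def neg(u):
    return (-u[0], -u[1])


def is_pair(u):
    return len(u) == 2


samples = [(1.0, 2.0), (-3.0, 0.5), (0.0, 4.0)]
scalars = [-2.0, 0.0, 1.5]
print(check_axioms(add, smul, (0.0, 0.0), neg, is_pair, samples, scalars))  # []
```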

General vector spaces

Introduction

This is the beginning of the end, at least for a conventional linear algebra course. We have studied vectors, matrices and spaces. Many people find these concepts quite abstract, but everything is relative. Mathematicians often like to distinguish between mathematics and calculation, and they place linear algebra in the latter category. This may sound provocative, especially after all the hard work of learning the content, but the intention is purely academic. We hope that this lecture note will help students appreciate the difference and inspire them to learn more about the world of abstract algebra.

Mathematics is a logical endeavor, much like philosophy and law. It requires analytical and abstract thinking, because the goal is to prove insights and to see whether the relationships we find can be generalized. Sometimes a discovery is merely the practical tip of the iceberg, and the truth beneath the surface can be proven to be much greater.

A generalization of vector spaces

We generalize the concept of a vector space by specifying the requirements that allow a general set of objects, equipped with two operations, to be regarded as a vector space. Up to this point, we have associated addition, scalars, multiplication and vectors with concrete, practical meanings.

Generalization does not mean that we add information. It means that we subtract information.

In other words, generalizing does not add knowledge to the context. On the contrary, it sets aside some of the knowledge we have already learned. We begin with a brief summary of the core properties of subspaces:

All subspaces are closed under scalar multiplication and addition.

All subspaces contain the zero vector $\mathbf{0}$, and the vectors in a subspace satisfy the algebraic properties of addition and scalar multiplication.
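As a quick illustration of these properties, the sketch below takes the line $y = 2x$ through the origin in $\mathbb{R}^2$. This line is an example chosen here, not one from the text. The sketch spot-checks closure and the zero vector on a few integer samples. A line that misses the origin, such as $y = 2x + 1$, already fails the zero-vector test.

```python
def on_line(p):
    """Membership test for W = {(x, y) : y = 2x}, a line through the origin."""
    x, y = p
    return y == 2 * x


def on_shifted_line(p):
    """Membership test for {(x, y) : y = 2x + 1}, which misses the origin."""
    x, y = p
    return y == 2 * x + 1


def add(p, q):
    return (p[0] + q[0], p[1] + q[1])


def smul(k, p):
    return (k * p[0], k * p[1])


samples = [(0, 0), (1, 2), (-3, -6), (5, 10)]
scalars = [-2, 0, 3]

print(on_line((0, 0)))                                             # True
print(all(on_line(add(p, q)) for p in samples for q in samples))   # True
print(all(on_line(smul(k, p)) for k in scalars for p in samples))  # True
print(on_shifted_line((0, 0)))                                     # False
```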

Now we take the next step and define what we mean by addition and scalar multiplication (remember: we are generalizing concepts). Try reading the following sections as if you were meeting these ideas for the first time.

The vector space axioms

Let $V$ be a nonempty set of objects on which we define two operations:

By addition we mean a rule that associates with each pair of objects $\mathbf{u}$ and $\mathbf{v}$ in $V$ a unique object $\mathbf{u} + \mathbf{v}$, which we regard as the sum of $\mathbf{u}$ and $\mathbf{v}$.

By scalar multiplication we mean a rule that associates with each scalar $k$ and each object $\mathbf{u}$ in $V$ a unique object $k\mathbf{u}$, which we regard as the scalar product of $\mathbf{u}$ by $k$.

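Note that we reuse the terms addition and multiplication, but we stay open to how the operations are actually defined. We call $V$ a vector space, and its objects vectors, if the two operations satisfy ten axioms called the vector space axioms. Specifically, let $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ be objects in $V$, and let $k$ and $m$ be scalars. Then $V$ is a vector space if the following axioms hold for addition and scalar multiplication:

1. $V$ is closed under addition; that is, if $\mathbf{u}$ and $\mathbf{v}$ are in $V$, then $\mathbf{u} + \mathbf{v}$ is in $V$.

2. $\mathbf{u} + \mathbf{v} = \mathbf{v} + \mathbf{u}$

3. $\mathbf{u} + (\mathbf{v} + \mathbf{w}) = (\mathbf{u} + \mathbf{v}) + \mathbf{w}$

4. $V$ contains an object $\mathbf{0}$ (called the zero vector) that behaves like an additive zero in the sense that $\mathbf{u} + \mathbf{0} = \mathbf{u}$ for every $\mathbf{u}$ in $V$.

5. For each object $\mathbf{u}$ in $V$, there is an object $-\mathbf{u}$ in $V$ (called the negative of $\mathbf{u}$) such that $\mathbf{u} + (-\mathbf{u}) = \mathbf{0}$.

6. $V$ is closed under scalar multiplication; that is, if $\mathbf{u}$ is in $V$ and $k$ is a scalar, then $k\mathbf{u}$ is in $V$.

7. $k(\mathbf{u} + \mathbf{v}) = k\mathbf{u} + k\mathbf{v}$

8. $(k + m)\mathbf{u} = k\mathbf{u} + m\mathbf{u}$

9. $k(m\mathbf{u}) = (km)\mathbf{u}$

10. $1\mathbf{u} = \mathbf{u}$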
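The axioms are separate requirements, and changing just one operation can break one of them. The hypothetical example below keeps the usual addition on $\mathbb{R}^2$ but redefines scalar multiplication as $k(x, y) = (kx, 0)$. On integer samples, three scalar axioms still hold: $k(\mathbf{u} + \mathbf{v}) = k\mathbf{u} + k\mathbf{v}$, $(k + m)\mathbf{u} = k\mathbf{u} + m\mathbf{u}$ and $k(m\mathbf{u}) = (km)\mathbf{u}$. However, $1\mathbf{u} = \mathbf{u}$ fails, so this set with these operations is not a vector space.

```python
import itertools


def add(u, v):
    """Usual addition in R^2."""
    return (u[0] + v[0], u[1] + v[1])


def smul(k, u):
    """Modified scalar multiplication (hypothetical): the second component is dropped."""
    return (k * u[0], 0)


samples = [(1, 2), (-3, 5), (4, 0)]
scalars = [-2, 0, 3]
pairs = list(itertools.product(samples, repeat=2))

axiom7 = all(smul(k, add(u, v)) == add(smul(k, u), smul(k, v))
             for k in scalars for u, v in pairs)
axiom8 = all(smul(k + m, u) == add(smul(k, u), smul(m, u))
             for k in scalars for m in scalars for u in samples)
axiom9 = all(smul(k, smul(m, u)) == smul(k * m, u)
             for k in scalars for m in scalars for u in samples)
axiom10 = all(smul(1, u) == u for u in samples)

print(axiom7, axiom8, axiom9, axiom10)  # True True True False
```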

Thinking abstractly means letting go of limiting conditions and trying to think minimally and afresh. If the above statements did not feel like a challenge, perhaps the following theorem will:

If $\mathbf{u}$ is a vector in a vector space $V$, and if $k$ is a scalar, then:

(a) $0\mathbf{u} = \mathbf{0}$

(b) $k\mathbf{0} = \mathbf{0}$

(c) $(-1)\mathbf{u} = -\mathbf{u}$

Look at the first property of the above theorem:

$$0\mathbf{u} = \mathbf{0}$$

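This property is so intuitive that it is difficult to suggest anything else, right? Surely the scalar $0$ multiplied by any vector $\mathbf{u}$ should give the zero vector $\mathbf{0}$? You do get that result with the conventional definition of scalar multiplication, where we multiply each component of the vector $\mathbf{u}$ by the scalar. But what if we set aside the precise definition of the operation and approach this with an abstract mindset? Let's say we do not know how scalar multiplication works. What we do know are the ten vector space axioms. Here is a six-step proof of $0\mathbf{u} = \mathbf{0}$ that relies only on those axioms:

$$
\begin{aligned}
0\mathbf{u} + 0\mathbf{u} &= (0 + 0)\mathbf{u} && \text{(Axiom 8)}\\
&= 0\mathbf{u} && \text{(property of real numbers)}\\
\big(0\mathbf{u} + 0\mathbf{u}\big) + (-0\mathbf{u}) &= 0\mathbf{u} + (-0\mathbf{u}) && \text{(Axiom 5)}\\
0\mathbf{u} + \big(0\mathbf{u} + (-0\mathbf{u})\big) &= 0\mathbf{u} + (-0\mathbf{u}) && \text{(Axiom 3)}\\
0\mathbf{u} + \mathbf{0} &= \mathbf{0} && \text{(Axiom 5)}\\
0\mathbf{u} &= \mathbf{0} && \text{(Axiom 4)}
\end{aligned}
$$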

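To the right of each step we state which of the ten axioms supports it. The one step that is not an axiom uses a property of the real numbers, namely $0 + 0 = 0$. See how abstract each step in the proof is, and how we never split the vectors into components. Therefore, the proof applies to all objects that may be called vectors, in every set that qualifies as a vector space. We summarize here the proofs of the three statements in the above theorem:

(a) $0\mathbf{u} = \mathbf{0}$:

$$
\begin{aligned}
0\mathbf{u} + 0\mathbf{u} &= (0 + 0)\mathbf{u} = 0\mathbf{u} && \text{(Axiom 8, real numbers)}\\
0\mathbf{u} = 0\mathbf{u} + \mathbf{0} &= 0\mathbf{u} + \big(0\mathbf{u} + (-0\mathbf{u})\big) && \text{(Axioms 4, 5)}\\
&= \big(0\mathbf{u} + 0\mathbf{u}\big) + (-0\mathbf{u}) && \text{(Axiom 3)}\\
&= 0\mathbf{u} + (-0\mathbf{u}) = \mathbf{0} && \text{(first line, Axiom 5)}
\end{aligned}
$$

(b) $k\mathbf{0} = \mathbf{0}$:

$$
\begin{aligned}
k\mathbf{0} + k\mathbf{0} &= k(\mathbf{0} + \mathbf{0}) = k\mathbf{0} && \text{(Axioms 7, 4)}\\
k\mathbf{0} = k\mathbf{0} + \mathbf{0} &= k\mathbf{0} + \big(k\mathbf{0} + (-k\mathbf{0})\big) && \text{(Axioms 4, 5)}\\
&= \big(k\mathbf{0} + k\mathbf{0}\big) + (-k\mathbf{0}) && \text{(Axiom 3)}\\
&= k\mathbf{0} + (-k\mathbf{0}) = \mathbf{0} && \text{(first line, Axiom 5)}
\end{aligned}
$$

(c) $(-1)\mathbf{u} = -\mathbf{u}$. Since a vector has only one negative, it suffices to show that $\mathbf{u} + (-1)\mathbf{u} = \mathbf{0}$:

$$
\begin{aligned}
\mathbf{u} + (-1)\mathbf{u} &= 1\mathbf{u} + (-1)\mathbf{u} && \text{(Axiom 10)}\\
&= \big(1 + (-1)\big)\mathbf{u} && \text{(Axiom 8)}\\
&= 0\mathbf{u} && \text{(property of real numbers)}\\
&= \mathbf{0} && \text{(by (a))}
\end{aligned}
$$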

where the last line of the last proof refers to the result of the first proof. Reusing results from earlier reasoning to prove new ones is a beautiful idea, used in both mathematics and philosophy. We now move on to three examples of vector spaces, namely function spaces, polynomial spaces and matrix spaces.
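Because the proofs never look inside the vectors, the theorem must also hold in spaces where the operations look nothing like the usual ones. The Python sketch below spot-checks the three statements $0\mathbf{u} = \mathbf{0}$, $k\mathbf{0} = \mathbf{0}$ and $(-1)\mathbf{u} = -\mathbf{u}$ in the space of positive reals discussed at the end of this note. In that space, addition is ordinary multiplication and scalar multiplication is exponentiation. The zero vector is the number 1, and the negative of $u$ is $1/u$. The function names are our own.

```python
import math


# Operations of the space of positive reals (taken from later in this note).
def vadd(u, v):
    """Vector addition: ordinary multiplication of positive reals."""
    return u * v


def smul(k, u):
    """Scalar multiplication: raise the vector to the power k."""
    return u ** k


ZERO = 1.0  # zero vector, since vadd(u, 1.0) == u


def neg(u):
    """Negative of u, since vadd(u, 1 / u) is (up to rounding) 1.0, the zero vector."""
    return 1.0 / u


vectors = [0.5, 2.0, 7.25]
scalars = [-3.0, 0.0, 2.5]

stmt_a = all(smul(0, u) == ZERO for u in vectors)                 # 0u = 0
stmt_b = all(smul(k, ZERO) == ZERO for k in scalars)              # k0 = 0
stmt_c = all(math.isclose(smul(-1, u), neg(u)) for u in vectors)  # (-1)u = -u
print(stmt_a, stmt_b, stmt_c)  # True True True
```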

Function spaces

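Function spaces, especially spaces of real-valued functions, are among the most commonly visited vector spaces in higher mathematics. Let's start from our conventional view of a vector $\mathbf{u}$ in $\mathbb{R}^3$:

$$\mathbf{u} = (u_1, u_2, u_3)$$

and instead regard each component of $\mathbf{u}$ as a function value. Let's say that we have

$$u_1 = f(1), \quad u_2 = f(2), \quad u_3 = f(3)$$

which gives us:

$$\mathbf{u} = \big(f(1), f(2), f(3)\big)$$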
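A minimal Python sketch of this idea, with $f(x) = x^2$ and a second function $g(x) = 3x + 1$ chosen here purely as examples. Sampling a function at $x = 1, 2, 3$ produces a 3-tuple, and adding two such tuples componentwise gives exactly the samples of the function $f + g$.

```python
def sample(func, points=(1, 2, 3)):
    """Turn a function into a tuple of its values at the given points."""
    return tuple(func(x) for x in points)


def f(x):
    return x ** 2        # example function (an assumption)


def g(x):
    return 3 * x + 1     # a second example function


def f_plus_g(x):
    return f(x) + g(x)


u = sample(f)            # (f(1), f(2), f(3)) = (1, 4, 9)
v = sample(g)            # (4, 7, 10)
u_plus_v = tuple(a + b for a, b in zip(u, v))

print(u_plus_v)                      # (5, 11, 19)
print(u_plus_v == sample(f_plus_g))  # True
```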

In the example above, we refer to the vector $\mathbf{u}$ as a 3-tuple, that is, a finite sequence of three numbers. In general, we speak of $n$-tuples. If we plotted the vector $\mathbf{u}$, we would get three points:

$$(1, f(1)), \quad (2, f(2)), \quad (3, f(3))$$

in a two-dimensional graph with $x$ as the horizontal axis and $f(x)$ as the vertical axis. From calculus, however, we are used to plotting graphs over all real numbers, so we can take this to the next level and consider:

$$-\infty < x < \infty$$

We can extend our notion of a function-valued vector to cover every single real number. This gives us a vector $\mathbf{u}$ with infinitely many components, one for each real number $x$:

$$\mathbf{u} = \big(f(x)\big)_{x \in \mathbb{R}}$$

So we have that $\mathbf{u} = f$, and that the component of this vector at position $x$ is $f(x)$. Pretty cool, huh? We denote the set of real-valued functions that are defined for all real values of $x$ by $F(-\infty, \infty)$. We agree that two functions in this set, $f$ and $g$, are equal if, and only if,

$$f(x) = g(x) \quad \forall x \in \mathbb{R}$$

where the sign $\forall$ means "for all". So how should we define the operations of addition and scalar multiplication? They are typically kept simple and defined pointwise, in the conventional way:

$$(f + g)(x) = f(x) + g(x), \qquad (kf)(x) = k\,f(x)$$

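Such a function space $F(-\infty, \infty)$, with the above operations for addition and scalar multiplication, is a vector space because it satisfies all ten vector space axioms. Its zero vector is the function that equals $0$ for every $x$, and the negative of $f$ is the function $-f$.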
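In code, the elements of the function space (written $F(-\infty, \infty)$ here) can be modeled as Python callables. The pointwise operations then become functions that build new callables. This is a sketch that assumes floating-point functions are a fair stand-in for real-valued ones. Equality "for all $x$" cannot be tested by a computer, so the check below only compares functions at a few sample points.

```python
import math


def add(f, g):
    """Pointwise sum: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)


def smul(k, f):
    """Pointwise scalar multiple: (k f)(x) = k * f(x)."""
    return lambda x: k * f(x)


def zero(x):
    """The zero vector of the space: the function that is 0 everywhere."""
    return 0.0


def neg(f):
    """The negative of f, namely (-1) f."""
    return smul(-1, f)


h = add(math.sin, smul(2, math.cos))   # h(x) = sin(x) + 2 cos(x)
print(h(0.0))                          # 2.0

points = [-1.5, 0.0, 2.0, 10.0]
# f + (-f) agrees with the zero function at every sample point.
print(all(add(math.sin, neg(math.sin))(x) == zero(x) for x in points))  # True
```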

Polynomial spaces

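Building on function spaces, we can let $n$ be a non-negative integer and let $P_n$ be the set of all real-valued functions of the form:

$$p(x) = a_0 + a_1 x + a_2 x^2 + \cdots + a_n x^n$$

where $a_0, a_1, \ldots, a_n$ are real numbers. This means that $P_n$ is the set of all polynomials of degree $n$ or less. With the same definitions of addition and scalar multiplication as for $F(-\infty, \infty)$, $P_n$ is a vector space. Even more, it is a subspace of $F(-\infty, \infty)$. To show this, we need to show that $P_n$ is closed under addition and scalar multiplication. Let $p$ and $q$ be in $P_n$:

$$p(x) = a_0 + a_1 x + \cdots + a_n x^n, \qquad q(x) = b_0 + b_1 x + \cdots + b_n x^n$$

Then, for scalar multiplication by a scalar $k$, we have:

$$(kp)(x) = ka_0 + ka_1 x + \cdots + ka_n x^n$$

As for the addition operation, we have:

$$(p + q)(x) = (a_0 + b_0) + (a_1 + b_1)x + \cdots + (a_n + b_n)x^n$$

This shows that $kp$ and $p + q$ are both polynomials of degree $n$ or less, and hence belong to the polynomial space $P_n$.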
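The closure argument can be mirrored in a short Python sketch. Here a polynomial of degree at most $n$ is encoded by its coefficient list $[a_0, a_1, \ldots, a_n]$, an encoding assumed for illustration. Both operations then act on coefficients exactly as in the two formulas above. The example also shows why $P_n$ is defined as degree $n$ or less: the sum of two polynomials of degree exactly $2$ can have degree $1$.

```python
def poly_add(p, q):
    """Add two polynomials in P_n coefficient by coefficient."""
    return [a + b for a, b in zip(p, q)]


def poly_smul(k, p):
    """Multiply every coefficient by the scalar k."""
    return [k * a for a in p]


def evaluate(p, x):
    """Compute a0 + a1 x + ... + an x^n."""
    return sum(a * x ** i for i, a in enumerate(p))


n = 2
p = [1, 0, 3]     # 1 + 3x^2
q = [2, -1, -3]   # 2 - x - 3x^2

s = poly_add(p, q)    # [3, -1, 0], i.e. 3 - x: the x^2 terms cancel
t = poly_smul(4, p)   # [4, 0, 12], i.e. 4 + 12x^2

# Both results still have n + 1 coefficients, so they lie in P_2.
print(len(s) == n + 1 and len(t) == n + 1)                # True
print(evaluate(s, 2) == evaluate(p, 2) + evaluate(q, 2))  # True
```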

The polynomial space $P_n$ is interesting because we can define a beautiful basis for it. Recall that a basis for a vector space must be linearly independent and must span the entire space. This means that every polynomial in $P_n$ must be expressible as a unique linear combination of the basis vectors. Several bases serve this purpose; here is the simplest one, the standard basis:

$$S = \{1, x, x^2, \ldots, x^n\}$$

Do you see what a linear combination of this basis looks like? For each $p$ in $P_n$ there is a unique set of coordinates $a_0, a_1, \ldots, a_n$ such that:

$$p = a_0 \cdot 1 + a_1 x + a_2 x^2 + \cdots + a_n x^n$$
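Unique coordinates have a practical consequence. A polynomial of degree at most $n$ is pinned down by its values at any $n + 1$ distinct points. Its coordinates $a_0, \ldots, a_n$ can be recovered by solving a linear system whose rows are $1, x, x^2, \ldots, x^n$ (a Vandermonde system). The sketch below does this with exact fractions and Gauss-Jordan elimination. The helper name `coordinates` and the example polynomial are our own choices.

```python
from fractions import Fraction


def coordinates(points, values):
    """Solve for (a0, ..., an) given p(x_i) = values[i] at distinct points x_i."""
    size = len(points)
    # Augmented rows [1, x, x^2, ..., x^n | p(x)] with exact rational entries.
    rows = [[Fraction(x) ** j for j in range(size)] + [Fraction(y)]
            for x, y in zip(points, values)]
    for col in range(size):
        # A nonzero pivot exists because the points are distinct.
        pivot = next(r for r in range(col, size) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        # Eliminate this column from every other row (Gauss-Jordan).
        for r in range(size):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col] / rows[col][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[i][-1] / rows[i][i] for i in range(size)]


def p(x):
    return 5 - 2 * x + 3 * x ** 2   # example in P_2 with coordinates (5, -2, 3)


pts = [0, 1, 2]
print(coordinates(pts, [p(x) for x in pts]))
# [Fraction(5, 1), Fraction(-2, 1), Fraction(3, 1)]
```

Any three distinct points give the same coordinates, which is the uniqueness at work.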

Isn't this beautiful?

Matrix spaces

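Matrix spaces are another fascinating variant of vector spaces. Sure, it may sound counterintuitive to consider matrices as vectors. But remember: a generalization does not add information, it removes the limitations of what is already known. So, let $M_{mn}$ be the matrix space of all $m \times n$ matrices with real entries. In particular, the $1 \times n$ matrices in $M_{1n}$ are exactly what we recognize, according to our old definition, as vectors. We define addition and scalar multiplication of matrices as we are used to seeing them. Then this space is closed under addition and scalar multiplication, because adding two $m \times n$ matrices, or multiplying one by a scalar, again gives an $m \times n$ matrix. Let us take a more concrete example and examine the matrix space $M_{22}$ of $2 \times 2$ matrices. What would a suitable basis look like? Again, there are infinitely many bases to choose from, but we keep it simple. We have that

$$M_1 = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}, \quad M_2 = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}, \quad M_3 = \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix}, \quad M_4 = \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix}$$

form a basis for $M_{22}$, since for each $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$ in this vector space we have that:

$$A = aM_1 + bM_2 + cM_3 + dM_4$$

The coefficients $a$, $b$, $c$, $d$ are uniquely determined by the entries of $A$. In particular, the combination equals the zero matrix only if $a = b = c = d = 0$. Hence, $S = \{M_1, M_2, M_3, M_4\}$ forms a basis for $M_{22}$.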
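The same reasoning in Python, with $2 \times 2$ matrices stored as nested lists (a representation chosen for this sketch). For the standard basis, the coordinates of $A$ are simply its four entries, and recombining them with $M_1, \ldots, M_4$ gives $A$ back.

```python
M1 = [[1, 0], [0, 0]]
M2 = [[0, 1], [0, 0]]
M3 = [[0, 0], [1, 0]]
M4 = [[0, 0], [0, 1]]
BASIS = [M1, M2, M3, M4]


def mat_add(A, B):
    """Entrywise sum of two 2x2 matrices."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_smul(k, A):
    """Multiply every entry of A by the scalar k."""
    return [[k * a for a in row] for row in A]


def coords(A):
    """Coordinates with respect to M1..M4: just the entries a, b, c, d."""
    return [A[0][0], A[0][1], A[1][0], A[1][1]]


def combine(cs):
    """Build c1*M1 + c2*M2 + c3*M3 + c4*M4."""
    result = [[0, 0], [0, 0]]
    for c, M in zip(cs, BASIS):
        result = mat_add(result, mat_smul(c, M))
    return result


A = [[4, -1], [7, 2]]
print(coords(A))                # [4, -1, 7, 2]
print(combine(coords(A)) == A)  # True
```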

An unusual vector space

Now is the time for an intellectual challenge. We have given several examples of vector spaces, but they have all used the conventional definitions of addition and scalar multiplication. We will now introduce two new operations, while also treating real numbers as vectors.

Let $V$ be the set of all positive real numbers, whose elements we call vectors and denote as $\mathbf{u} = u$ and $\mathbf{v} = v$. For any real number $k$ and any vectors $\mathbf{u}$ and $\mathbf{v}$ in $V$, we define the operations $\oplus$ and $\odot$ as our addition and scalar multiplication respectively:

$$\mathbf{u} \oplus \mathbf{v} = uv \qquad \text{(addition)}$$

$$k \odot \mathbf{u} = u^k \qquad \text{(scalar multiplication)}$$
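Written as a minimal Python sketch, the two new operations take one line each. The names `vadd` and `smul` are our own. Floating-point numbers only approximate the positive reals, since very large exponents can underflow to 0 or overflow. The closure check below therefore uses moderate sample values.

```python
def vadd(u, v):
    """u ⊕ v = uv: vector addition is ordinary multiplication."""
    return u * v


def smul(k, u):
    """k ⊙ u = u^k: scalar multiplication is exponentiation."""
    return u ** k


print(vadd(2.0, 3.0))   # 6.0, the "sum" of the vectors 2 and 3
print(smul(2, 3.0))     # 9.0, the scalar 2 "times" the vector 3

vectors = [0.25, 1.0, 3.0, 10.0]
scalars = [-2.0, 0.0, 0.5, 3.0]
# Closure: every result is again a positive real number.
print(all(vadd(u, v) > 0 for u in vectors for v in vectors))  # True
print(all(smul(k, u) > 0 for k in scalars for u in vectors))  # True
```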

Both $uv$ and $u^k$ are positive numbers, so they belong to $V$, and the results are therefore considered vectors in that space. This is a good exercise in generalization, where we set aside what we know and consider something else. But does this make sense? Are the ten vector space axioms fulfilled for this set with the above operations $\oplus$ and $\odot$? If so, $V$ is a vector space. We confirm the axioms below. It makes a fairly long list, but there are no shortcuts: each axiom has to be confirmed. Take your time and read carefully.

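Let $V$ be the set of all positive real numbers, let $\mathbf{u} = u$, $\mathbf{v} = v$ and $\mathbf{w} = w$ be vectors in $V$, and let the scalars $k$ and $m$ be any real numbers. Take the following two definitions of addition and scalar multiplication, respectively:

$$\mathbf{u} \oplus \mathbf{v} = uv \qquad \text{(addition)}$$

$$k \odot \mathbf{u} = u^k \qquad \text{(scalar multiplication)}$$

With these definitions, $V$ is a vector space:

1. $\mathbf{u} \oplus \mathbf{v} = uv > 0$, so $\mathbf{u} \oplus \mathbf{v}$ is in $V$.

2. $\mathbf{u} \oplus \mathbf{v} = uv = vu = \mathbf{v} \oplus \mathbf{u}$

3. $\mathbf{u} \oplus (\mathbf{v} \oplus \mathbf{w}) = u(vw) = (uv)w = (\mathbf{u} \oplus \mathbf{v}) \oplus \mathbf{w}$

4. The zero vector is the number $1$: $\mathbf{u} \oplus 1 = u \cdot 1 = u = \mathbf{u}$

5. The negative of $\mathbf{u}$ is $1/u$: $\mathbf{u} \oplus (1/u) = u \cdot \frac{1}{u} = 1$, which is the zero vector.

6. $k \odot \mathbf{u} = u^k > 0$, so $k \odot \mathbf{u}$ is in $V$.

7. $k \odot (\mathbf{u} \oplus \mathbf{v}) = (uv)^k = u^k v^k = (k \odot \mathbf{u}) \oplus (k \odot \mathbf{v})$

8. $(k + m) \odot \mathbf{u} = u^{k+m} = u^k u^m = (k \odot \mathbf{u}) \oplus (m \odot \mathbf{u})$

9. $k \odot (m \odot \mathbf{u}) = (u^m)^k = u^{km} = (km) \odot \mathbf{u}$

10. $1 \odot \mathbf{u} = u^1 = u = \mathbf{u}$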

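A note on axiom 8, $(k + m) \odot \mathbf{u} = (k \odot \mathbf{u}) \oplus (m \odot \mathbf{u})$: the sum $k + m$ uses the conventional definition of addition, because scalars added to each other are not considered vectors of $V$. However, a scalar multiplied by a vector of $V$, and the sum of two vectors of $V$, must follow the new definitions.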
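As a sanity check on the algebra, the following Python sketch evaluates both sides of each of the ten axioms for this space. It uses a handful of sample vectors and scalars, and compares results with `math.isclose` because of floating-point rounding. This is evidence, not a proof, and the helper names are our own. Note that the sum `k + m` in the check for axiom 8 is ordinary addition of scalars, exactly as the note above says.

```python
import itertools
import math


def vadd(u, v):
    return u * v          # u ⊕ v = uv


def smul(k, u):
    return u ** k         # k ⊙ u = u^k


ZERO = 1.0                # the zero vector of this space


def neg(u):
    return 1.0 / u        # the negative of u


vectors = [0.5, 1.0, 2.0, 9.0]
scalars = [-1.5, 0.0, 1.0, 2.0]
eq = math.isclose
pairs = list(itertools.product(vectors, repeat=2))
triples = list(itertools.product(vectors, repeat=3))
scalar_pairs = list(itertools.product(scalars, repeat=2))

checks = {
    1: all(vadd(u, v) > 0 for u, v in pairs),
    2: all(eq(vadd(u, v), vadd(v, u)) for u, v in pairs),
    3: all(eq(vadd(u, vadd(v, w)), vadd(vadd(u, v), w)) for u, v, w in triples),
    4: all(eq(vadd(u, ZERO), u) for u in vectors),
    5: all(eq(vadd(u, neg(u)), ZERO) for u in vectors),
    6: all(smul(k, u) > 0 for k in scalars for u in vectors),
    7: all(eq(smul(k, vadd(u, v)), vadd(smul(k, u), smul(k, v)))
           for k in scalars for u, v in pairs),
    # The scalar sum k + m below is ordinary addition of real numbers.
    8: all(eq(smul(k + m, u), vadd(smul(k, u), smul(m, u)))
           for k, m in scalar_pairs for u in vectors),
    9: all(eq(smul(k, smul(m, u)), smul(k * m, u))
           for k, m in scalar_pairs for u in vectors),
    10: all(eq(smul(1, u), u) for u in vectors),
}
print(all(checks.values()))  # True
```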